Commission, December 19, 2019, No M.9424

EUROPEAN COMMISSION

Decision

NVIDIA / MELLANOX

Subject: Case M.9424 – NVIDIA / MELLANOX

Commission decision pursuant to Article 6(1)(b) of Council Regulation No 139/2004 (1) and Article 57 of the Agreement on the European Economic Area (2)

Dear Sir or Madam,

(1) On 14 November 2019, following a referral pursuant to Article 4(5) of the Merger Regulation, the European Commission received notification of a proposed concentration pursuant to Article 4 of the Merger Regulation by which NVIDIA Corporation (“NVIDIA”, USA) intends to acquire within the meaning of Article 3(1)(b) of the Merger Regulation control of Mellanox Technologies, Ltd. (“Mellanox”, Israel) by way of purchase of shares (the “Transaction”) (3). NVIDIA is designated hereinafter as the “Notifying Party”, and NVIDIA and Mellanox are together referred to as the “Parties”, while the undertaking resulting from the Transaction is referred to as the "Merged Entity".

1. THE PARTIES

(2) NVIDIA is a publicly traded Delaware Corporation, founded in 1993 and headquartered in Santa Clara, California. NVIDIA invented the graphics processing unit (“GPU”) in 1999. NVIDIA specializes in markets in which GPU-based visual computing and accelerated computing platforms can provide enhanced throughput for applications. NVIDIA’s products address four distinct areas: gaming, professional visualization, datacentre, and automotive. Only the datacentre area is relevant to the Transaction with Mellanox, as datacentre customers are the only ones also procuring components from Mellanox. In addition to GPU cards, NVIDIA produces software called “NVIDIA GRID” that allows computers that do not have their own GPU to use “virtual GPUs”. Moreover, it offers one family of server systems (DGX-1, DGX-2, and DGX-Station) that perform GPU-accelerated Artificial Intelligence (“AI”) and deep learning training and inference applications. Finally, NVIDIA also offers the NGC Software Hub, a cloud-based software repository for AI developers that provides deep learning software stacks.

(3) Mellanox is a publicly held corporation, founded in 1999 and headquartered in Sunnyvale, California and Yokneam (Israel). Mellanox offers network interconnect products and solutions that facilitate efficient data transmission between servers, storage systems and communications infrastructure equipment within datacentres, based on two network interconnect protocols: Ethernet and InfiniBand. Mellanox’s network interconnect components include the following: network interface cards (“NICs” or “network adapters”), switches and routers, cables, and related software.

2. THE CONCENTRATION

(4) On 10 March 2019, NVIDIA, NVIDIA International Holdings Inc. (“NVIDIA Holdings”, USA), Teal Barvaz Ltd. (“Teal Barvaz”, Israel) and Mellanox entered into an Agreement and Plan of Merger (“Merger Agreement”). NVIDIA Holdings is a wholly-owned subsidiary of NVIDIA. Teal Barvaz is a wholly-owned subsidiary of NVIDIA Holdings.

(5) Pursuant to the Merger Agreement, the Transaction will be implemented as follows: Teal Barvaz (Merger Sub) merges with and into Mellanox with Mellanox being the surviving entity, following which Teal Barvaz will cease to exist, and Mellanox will become a wholly owned subsidiary of NVIDIA Holdings. Each of Mellanox’s shares will be transferred to NVIDIA Holdings in exchange for the right to receive an amount in cash equal to USD 125 (approximately EUR 106), representing a total acquisition value of approximately USD 6 900 million (approximately EUR 5 800 million) to be paid by NVIDIA.

(6) Therefore, NVIDIA (via NVIDIA Holdings) will acquire sole control over Mellanox and the Transaction constitutes a concentration within the meaning of Article 3(1)(b) of the Merger Regulation.

3. UNION DIMENSION

(7) The Transaction does not have a Union dimension within the meaning of Article 1(2) or Article 1(3) of the Merger Regulation as the EU turnover of one of the Parties (Mellanox) in the last financial year for which data is available at the date of notification amounted to EUR […].

(8) On 14 June 2019, the Notifying Party informed the Commission by means of a reasoned submission that the Commission should examine the Transaction pursuant to Article 4(5) of the Merger Regulation. The Commission transmitted a copy of that reasoned submission to the Member States on 14 June 2019.

(9) In fact, the Transaction fulfils the two conditions set out in Article 4(5) of the Merger Regulation since it is a concentration within the meaning of Article 3 of the Merger Regulation and it is capable of being reviewed under the national competition laws of three Member States, namely Czechia, Germany and Hungary.

(10) As none of the Member States competent to review the Transaction expressed its disagreement as regards the request to refer the case, the Transaction is deemed to have a Union dimension pursuant to Article 4(5) of the Merger Regulation.

4. RELEVANT MARKETS

4.1. Introduction

(11) The Transaction concerns key components used in datacentre servers, in particular those used for high performance computing (“HPC”; also sometimes referred to as “supercomputing”). HPC datacentres deliver the computation power required for research and innovations in a number of key developing areas such as autonomous driving, weather forecast, oil exploration and astrophysics. In particular, HPC datacentres are key enablers for many AI applications. Both Parties supply different components that can be used in datacentre servers.

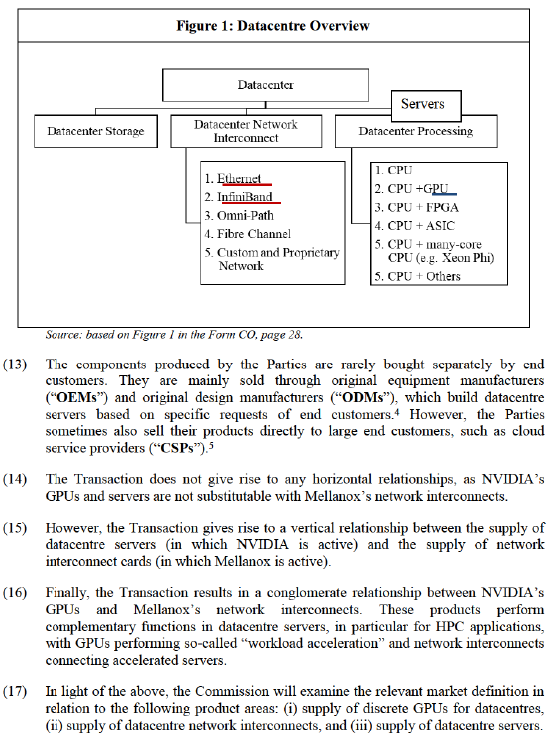

(12) Datacentres are a collection of servers that are connected by a network and that work together to process/compute workloads. Datacentres in general have three fundamental elements: (1) storage/memory, (2) network interconnect, and (3) processing/computing included in the servers, as Figure 1 illustrates.

4.2. Discrete GPUs for datacentres

4.2.1. Introduction

(18) GPUs are specialised semiconductor devices that are optimized for processing graphic images. They are made available to customers either as standalone, or “discrete”, semiconductor devices or as integrated components of chips that contain other components, including a central processing unit (“CPU”). In datacentres, GPUs are used to accelerate the datacentre workload computing/processing. They are only used in datacentres that require acceleration. They are necessarily used in addition to CPUs, which are always present in datacentre servers.

(19) When CPUs and GPUs are combined in a datacentre server, they carry out complementary computational tasks. CPUs operate as the general purpose centralised “brains” of computer systems. They are able to perform all types of operations. In contrast, GPUs – although they have more limited computational capabilities – are much better suited to process graphic images or computations that require massive parallel execution of relatively simple computational tasks. This is why GPUs are increasingly used in HPC and key AI applications, which both require massive parallel execution of rudimentary arithmetic operations.

(20) Other types of accelerators are sometimes used in datacentre servers: (1) field programmable gate arrays (“FPGAs”); (2) application specific integrated circuits (“ASICs”); (3) CPUs with integrated GPUs or many-core CPUs (as opposed to the standalone/discrete GPUs offered by NVIDIA).

4.2.2. Product market definition

4.2.2.1. Commission precedents

(21) The Commission has not assessed the boundaries of the market for discrete GPUs for datacentres in past decisions. However, in its 2011 Intel/McAfee decision, (6) the Commission has defined a separate market for CPUs based on the x86 architecture ("x86 CPUs"), in which GPUs and other accelerators were not included. In its 2015 Intel/Altera decision, the Commission also defined a separate market for FPGAs, distinct from other complex programmable logic devices (“CPLDs”) and from GPUs. (7)

4.2.2.2. Notifying Party’s views

(22) The Notifying Party submits that the relevant product market is the market for datacentre processing, which includes all types of processing, i.e., GPUs, as well as CPUs, FPGAs, ASICs (including ASICs developed in-house by companies, e.g., Google’s Tensor Processing Unit (“TPU”)) and any other processors/accelerators for datacentres. (8)

(23) The Notifying Party submits that from a demand-side perspective, for datacentre customers, GPUs are one acceleration choice amongst many and that the competition between accelerators is fierce. The Parties submit that GPUs cannot perform any processing that cannot also be performed by CPUs, FPGAs, ASICs, and other accelerators. Customers would consider all datacentre processing options, not only GPUs, which competitively constrains GPUs. No accelerator, or even any category of accelerators, is essential and indispensable to any datacentre. When designing and constructing their datacentres, customers will consider the processing needs of the datacentre as a whole. Datacentre customers compare all processing options in accordance with multiple variables, most notably price, performance, efficiency, and scalability. (9) Additionally, the Notifying Party submits that no accelerator option is always best suited for all applications, or even for a given type of application. (10)

(24) Moreover, the Notifying Party submits that the different types of datacentre accelerators are also constrained by cloud-based computing, for the following reasons. On the one hand, when customers compare the overall price and performance of datacentres, they take into account both building a system on their own premises and buying cloud-based datacentre computing. This would in turn constrain the GPU’s pricing and commitment to innovation. On the other hand, GPU sales to cloud service providers are constrained by the in-house solutions these providers develop. (11)

(25) The Notifying Party submits that there is also supply-side substitution. Several suppliers of datacentre processing have developed products that perform parallel processing, and act as accelerators, even if the suppliers do not name the products GPUs. (12) Finally, the Notifying Party claims that suppliers of GPUs for use beyond datacentres can also easily supply datacentre GPUs. This is because both types of GPUs are based on the same fundamental architecture. (13)

4.2.2.3. Results of the market investigation and Commission’s assessment

(26) As a preliminary remark, the market investigation confirmed that discrete GPUs for datacentres and discrete GPUs for gaming are part of different markets. (14) While the two types of GPUs are based on the same architecture, (15) they have different levels of performance, due to technical limitations of the GPUs for gaming. (16) Moreover, contractual restrictions and driver support prevent purchasers of NVIDIA’s discrete GPUs for gaming from deploying these in datacentres. (17) As a result, the majority of datacentre customers do not consider discrete GPUs for datacentres and discrete GPUs for gaming as substitutes. (18)

(27) As for the different types of datacentre processors, such as integrated GPUs, CPUs, FPGAs or ASICs, the responses to the market investigation showed that competitors, OEMs and most end customers consider different types of accelerators to be suitable for different kinds of HPC and deep learning applications. (19) They are therefore likely not part of the same market as discrete GPUs for datacentres. (20)

(28) Competitors and OEMs indicated that there are specific datacentre parallel workloads for which GPUs have become the standard acceleration solution. They submitted that for the most powerful high performance computers as well as for AI applications, a combination of CPUs and GPUs achieves the maximum efficiency. End customers would therefore prefer it to CPU-only architectures. (21)

(29) Several CSPs submitted that some high performance cloud computing customers specifically ask for GPU accelerated servers for certain types of workloads, such as deep learning, physics simulation or molecular modelling. These customers would not be willing to perform these compute-intensive workloads on servers with other types of acceleration. (22) This could be because the workload of these customers is already optimized for NVIDIA’s GPUs (e.g., using NVIDIA’s Compute Unified Device Architecture (“CUDA”)). (23) Moreover, for some applications, the total cost of ownership is lower when using GPUs, while for other applications, other types of accelerators (TPUs, FPGAs) have a total cost of ownership advantage. (24) Therefore, the CSPs consider that in most cases, GPUs and other types of accelerators are not substitutable when considering the specific applications they are meant to serve.

(30) The above was confirmed by the replies of end customers. For most HPC applications, the majority of end-customers considered only discrete GPUs, integrated GPUs and adding more CPUs as suitable alternatives. (25) However, they considered both integrated GPUs and adding more CPUs to have certain limitations in comparison with discrete GPUs. End customers pointed out that integrated GPUs mainly support light acceleration tasks, while adding more CPUs entails adding expense and power consumption in comparison to using discrete GPUs. (26)

(31) Moreover, for demanding parallelised workloads, ASICs and FPGAs are not considered suitable alternatives. (27) This is because ASICs are typically designed for very specific workloads and only for a particular customer. Since they lack flexibility and built-in software environment, they are not a suitable option for customers with limited resources who need to perform a range of different HPC workloads. (28)

(32) As for FPGAs, the respondents to the market investigation considered that while they are highly flexible and can be programmed to run parallelized workloads, they are unsuitable for most HPC applications. This is because, when compared to GPUs, FPGAs are relatively inefficient in terms of energy consumption, cost more and are more difficult to program. (29)

(33) End customers confirmed that when they decided to acquire a GPU accelerated server in the past, no other type of accelerator was considered a suitable alternative. (30) All end customers that replied to the question confirmed that for all GPU accelerated datacentres they procured between 2017 and 2019, they procured the GPUs from NVIDIA and were either not open to any alternative accelerated processing solutions or considered only GPUs from AMD as an alternative. (31)

(34) Based on the above, the Commission considers that there is a separate product market for discrete GPUs for datacentres, which does not include other types of datacentre processing solutions.

4.2.3. Geographic market definition

(35) The Commission has not assessed the geographic scope of the market for discrete GPUs for datacentres in past decisions. However, it has concluded that the market for CPUs (and possible segments thereof) (32) as well as the market for FPGAs (33) is worldwide in scope.

(36) The Notifying Party submits that the relevant geographic market for datacentre processing should be defined as worldwide. (34) This should remain the case even for potentially narrower product markets. (35)

(37) The majority of competitors, OEMs and end customers that replied to the market investigation confirmed that accelerated processing solutions are supplied on a worldwide basis, irrespective of the location of the component vendor or the location of the end-customer. (36) Moreover, the majority of competitors and OEMs and all end customers that expressed a view confirmed that the conditions of competition do not differ depending on the location of the datacentre of the end customer. (37)

(38) In light of the results of the market investigation, for the purposes of this decision, the Commission considers that the geographic market for discrete GPUs for datacentres is worldwide in scope.

4.3. Datacentre network interconnects

4.3.1. Introduction

(39) Datacentre network interconnects enable the transfer of data between servers or systems, e.g., connecting multiple servers together or connecting a server in a datacentre to a storage appliance.

(40) Network interconnects are made up of the following main components: (i) NICs that are used in the server, enabling it to communicate with other devices on the network; (ii) switches and routers that manage communications between servers; (38) (iii) cables that connect devices together and carry the data signals between devices; and (iv) supporting software.

(41) Network interconnects can be based on a variety of protocols, some of which are based on open standards (e.g., Ethernet, InfiniBand and Fibre Channel (“FC”)), while others are custom or proprietary (e.g., Cray’s Aries/Gemini/Slingshot, Atos Bull’s BXI, and Fujitsu’s Tofu). (39) The latter are currently not available to external server OEMs; they are only sold within their supplier’s own systems.

(42) The technical parameters considered by customers when selecting an interconnect solution include inter alia bandwidth, latency, interoperability, congestion control and deployment. Based on the results of the market investigation, bandwidth and latency are particularly important parameters for HPC customers. (40) Bandwidth is a measure of how much data can be sent and received at a time, which is a critical factor given it measures the capacity of a network. Latency measures the time required to transmit a packet across a network. In addition to these factors, customers would consider total cost of ownership, as well as other parameters such as the quality of service.

(43) Mellanox’s products are based on the Ethernet and InfiniBand protocols. Mellanox offers network interconnect integrated systems and components, which include NICs, switches, cables, and related software.

4.3.2. Product market definition

4.3.2.1. Commission precedents

(44) There are no Commission precedents defining the relevant markets for the datacentre network interconnect products manufactured and supplied by Mellanox.

(45) Several Commission decisions however address transactions involving network interconnect switch suppliers. In Broadcom/Brocade, (41) the Commission distinguished between the two main components of Fibre Channel SAN networks, i.e. switches and adapters. As regards switches, the Commission has previously considered a segmentation based on the different protocols and network technologies that they support.

(46) In addition, in a previous decision, (42) the Commission stated that the majority of respondents to its market investigation were of the view that the SerDes (serializer/deserializer) intellectual property used in high-speed NICs should be placed in different markets “for each standard speed (1G, 10G, 25G, 50G and the future 100G.)”.

4.3.2.2. Notifying Party’s views

(47) The Notifying Party submits that the relevant product market is the market for datacentre network interconnect, which includes all types of datacentre network interconnect solutions and all individual components.

(48) First, the Notifying Party submits that, from a demand-side perspective, Mellanox’s InfiniBand and Ethernet products are substitutable with datacentre interconnect products based on other protocols. This is based on the following main arguments:

· All of the competing protocols serve the same purpose and function and there are no technical differences between the major interconnects that would limit their substitutability. In particular, technically, Ethernet as well as other protocols are similar to InfiniBand on every relevant parameter, including, in particular, bandwidth and latency.

· Customers typically invite bids from different suppliers for different datacentre network interconnect solutions as illustrated by a number of examples of bids in which Mellanox’s Ethernet- and InfiniBand-based solutions competed directly against network interconnect solutions based on other protocols.

· Customers can and do switch from one protocol to another for newer generation datacentres as illustrated by a number of examples of customers having switched away from InfiniBand. In addition, customers can implement modules within a datacentre that use different network interconnects given that datacentre networking products comply with open standards and are interoperable.

(49) Second, the Notifying Party submits that there is a high degree of supply side substitutability for different interconnect solutions. Specifically, the Notifying Party explains that the barriers to enter another interconnect solution are quite low from the perspective of an existing supplier. In this respect, the Notifying Party estimates that suppliers can switch between the development and supply of different network interconnect solutions with an investment of less than USD […] and over a period of around […] years. The Notifying Party also describes examples of suppliers having abandoned InfiniBand and other interconnect technologies in favour of Ethernet, noting that these suppliers possess the technology and knowledge to readily switch back to these interconnect technologies.

(50) Third, the Notifying Party submits that, even though it may be conceivable from a demand-side perspective, it is not appropriate to define separate markets for each of the components that make up network interconnects given that competition among providers of datacentre components occurs at the “interconnect solutions” level. According to the Notifying Party this is also supported by the following supply-side substitutions considerations: (i) the know-how and technical skills for different components of interconnect solutions are similar and transferrable and (ii) some interconnect competitors (e.g., Cray) price and sell their offerings at the system level, not the component level.

(51) Fourth, the Notifying Party submits that the market for datacentre network interconnect should not be further sub-segmented based on bandwidth. In particular, the Notifying Party considers that it would be incorrect to define a market for Ethernet NICs that support bandwidths of 25 Gb/s or higher since both other network interconnect protocols and Ethernet NICs that support bandwidth of below 25 Gb/s exercise competitive constraints on 25 Gb/s+ Ethernet NICs.

(52) With respect to the competitive pressure exercised by Ethernet NICs that support bandwidth of below 25 Gb/s, the Notifying Party argues that the same hardware can be used for Ethernet NICs of different speeds and that a customer can therefore combine several 10 Gb/s NICs as an alternative to match the performance of a 25 Gb/s+ NIC. In addition, Mellanox reports that it has experienced situations where customers declared a strong preference for deploying 25 Gb/s+ Ethernet solutions in a datacentre node, but based on cost, convenience and other factors ultimately deployed 10 Gb/s solutions.

4.3.2.3. Commission’s assessment

(53) The Commission has considered a potential segmentation of the market for datacentre network interconnects between the various protocols and network technologies that they support. In particular, the Commission has considered a distinction between high performance fabrics and Ethernet-based network interconnects. The Commission has also considered further potential sub-segmentations within high performance fabrics and Ethernet-based network interconnects.

(1) Distinction between protocols

(54) Among the different protocols used for running datacentre network interconnects, the Commission has considered a potential distinction between, on the one hand, high performance fabrics and, on the other hand, Ethernet based network interconnects.

(55) Ethernet is the most widely used protocol for network interconnect solutions around the world. It is also the fastest growing interconnect protocol. Ethernet products are supplied by companies including Mellanox, Intel, Broadcom, Arista, Cisco, and Juniper. (43) Ethernet-based network interconnect solutions are available for a range of speeds, including speeds delivering high performance of above 25 Gb/s. In addition, Ethernet suppliers have developed new protocols to combine Remote Direct Memory Access (“RDMA”) technology with Ethernet (namely RDMA over converged Ethernet (“RoCE”) and iWARP protocols), which make it possible to reduce latency for Ethernet.

(56) Besides Ethernet-based network interconnects, a number of suppliers offer high performance fabrics. (44) High performance fabrics are integrated systems of custom hardware including NICs, switches, and cabling. They are designed to run on custom protocols and orchestrated by custom software. High-performance fabrics typically enable reliable high-speed communications across several hundreds or thousands of nodes. The different fabric components are designed to work together as part of the integrated fabric and are orchestrated by sophisticated software. High performance fabrics include Mellanox’s InfiniBand and Intel’s Omni-Path, as well as custom datacentre network interconnects based on protocols that are compatible with Ethernet such as Cray’s Aries/Gemini/Slingshot, Bull’s BXI and Fujitsu’s Tofu.

(57) Based on its market investigation, the Commission identifies two distinct product markets for (i) high performance fabrics and (ii) Ethernet-based network interconnects. This conclusion is based on the limited substitutability between high performance fabrics and Ethernet-based network interconnects, both from a demand-side and supply-side perspective.

(58) First, from a demand-side perspective, while the performance of Ethernet-based systems has increased in recent years, this performance remains significantly inferior to the performance achieved by high performance fabrics and in particular by Mellanox’s InfiniBand on a number of key parameters. Based on the results of the market investigation, the main performance gap between high performance fabrics and Ethernet-based solutions concerns latency. (45) Indeed, a large number of customers and competitors explained that Ethernet-based solutions do not constitute a suitable alternative to high performance fabrics because of significantly lower performance in terms of latency. Certain respondents further explained that low latency requirements in customers’ specifications de facto exclude Ethernet-based solutions from certain HPC tenders. (46)

(59) For example, a large OEM explained: “for some applications, low latency is a necessity and so is a high performance fabric”. (47) With respect to the question whether Ethernet-based solutions could be an alternative, this OEM further explained: “as a general principle, Ethernet is unsuitable for low latency modules. Ethernet will therefore generally not be a possible substitute for applications requiring the level of performance which Mellanox’s InfiniBand can deliver”.

(60) Mellanox’s own data also confirm the gap in latency between high performance fabrics and Ethernet-based solutions, since they show that the latency of Mellanox’s most advanced Ethernet NIC is […] the latency achieved by its InfiniBand products. (48)

(61) Second, respondents to the Commission’s market investigation confirm that there is no demand-side substitutability between high performance fabrics and Ethernet-based solutions for a significant portion of HPC datacentre applications. All competitors, all OEMs and a majority of end-customers who expressed their views consider that there are applications and workloads for which their company would only consider high performance fabrics as suitable. (49) These are typically high-end HPC and AI deep learning training applications, which require large systems combining many hundreds or thousands of nodes and for which low latency and high bandwidth are particularly important. (50)

(62) A number of universities and research centres further explained that their server clusters must be capable of accommodating a broad range of HPC workloads, including workloads requiring low latency for which only high performance fabrics are suitable. (51) Since low latency is a requirement at least for certain workloads, these customers explained that they consider high performance fabrics as the only possible choice for equipping the HPC server clusters that they operate.

(63) In addition, two major OEMs provided data on the last ten GPU accelerated datacentres (or GPU accelerated server clusters within datacentres) connected with Mellanox’s network interconnects that they have installed/equipped. (52) For all datacentres equipped with InfiniBand fabrics, these OEMs confirmed that they had no suitable network interconnect alternatives. In particular, they confirmed that they did not see Ethernet-based network interconnects as suitable alternatives.

(64) Third, several competitors and customers who indicated that there are certain HPC applications for which only high performance fabrics are suitable further specified that even RoCE-enabled Ethernet solutions with a speed of 25 Gb/s or higher do not currently achieve performance equivalent to InfiniBand, in particular in terms of latency. (53) These respondents explained that while RoCE-enabled Ethernet solutions may be suitable for low-end HPC applications that do not demand the highest performance levels offered by high performance fabrics such as InfiniBand, these solutions do not currently constitute an alternative for a large number of other HPC applications and complex AI machine learning training work.

(65) Fourth, Mellanox’s internal documents also [BUSINESS SECRETS – Information redacted regarding business strategy]. (54) In addition, [BUSINESS SECRETS – Information redacted regarding business strategy].

(66) Fifth, high performance fabrics differ from Ethernet-based network interconnects because they are sold almost exclusively as integrated systems whereas Ethernet-based network interconnects are typically sold as individual components. While customers sourcing Ethernet-based network interconnects have the possibility to mix-and-match between several different suppliers (e.g., buying NICs from one supplier and switches from another), this possibility is, in principle, not available for customers wanting to source high performance fabrics.

(67) This is confirmed by Mellanox which explains that: [BUSINESS SECRETS – Information redacted regarding business strategy]. (55)

(68) In addition, the Commission considers that there is only limited supply-side substitutability between Ethernet-based solutions and high performance fabrics, as shown by the following elements.

(69) First, the results of the market investigation show that there is no credible prospect that a competitor of Mellanox with Ethernet-based network interconnects would develop and start offering a high performance fabric that would be able to compete successfully with Mellanox’s latest generation InfiniBand fabric within less than a year. (56) Based on the replies from competitors, launching a competitive InfiniBand fabric or another type of high-performance fabric would take at least three years and would require costs significantly higher than the Notifying Party’s estimate reproduced above. A number of competitors also mentioned Intel’s Omni-Path failure as an example of the difficulty of entering the market for high performance fabrics.

(70) Second, while certain respondents envisage that further technological progress may allow Ethernet-based network interconnect systems to become a suitable alternative to high performance fabrics in the future, they acknowledge that this is currently uncertain. In this respect, beyond Slingshot, which some end-customers considered as Ethernet-based, the majority of end-customers and competitors do not believe that a competing Ethernet fabric will emerge within the next 2-3 years that would be able to compete successfully with InfiniBand. (57)

(2) Segmentation within high performance fabrics

(71) Within high performance fabrics, the Commission has considered potential segmentations (i) between different protocols, (ii) between the various components composing high performance fabrics and (iii) based on bandwidth.

(a) Distinction between protocols within high performance fabrics

(72) The Commission has considered a further distinction within high performance fabrics based on protocols. In particular, the Commission has considered a potential separate relevant product market for InfiniBand high performance fabrics, which could also potentially include Omni-Path given that this proprietary, high performance communication architecture, developed and owned by Intel, is rooted in the InfiniBand technology Intel acquired from QLogic in 2012.

(73) Based on the results of the market investigation, there are indications that customers wanting to procure InfiniBand fabric would not consider any other alternative high performance fabrics (see above).

(74) However, for the purpose of this decision, the question whether high performance fabrics should be further distinguished based on protocols can be left open since it does not materially change the Commission's assessment.

(b) Distinction between individual components within high performance fabrics

(75) With respect to a potential distinction between individual components, as explained above, a key characteristic of high performance fabrics versus for example Ethernet-based network interconnect systems is that fabrics are integrated systems of custom hardware designed to run on custom protocols and orchestrated by custom software. There is therefore only very limited or no interoperability between individual components across the different custom protocols for high performance. In addition, […], the various components composing high performance fabrics are almost always sold together to final customers. This is also confirmed by several customers. (58)

(76) Based on these elements, the Commission considers that there is no need to further distinguish high performance fabrics based on the individual components composing the fabrics.

(c) Distinction based on bandwidth within high performance fabrics

(77) With respect to a potential distinction based on bandwidth, Mellanox currently offers InfiniBand interconnect solutions with a bandwidth of 100 Gb/s and 200 Gb/s. (59) By contrast, other high performance fabrics currently only support speeds of 100 Gb/s.

(78) The Commission’s market investigation confirms that bandwidth is an important parameter for the choice of network interconnect systems, in particular in an HPC context. (60) One large OEM selling solutions equipped with InfiniBand fabrics to a large number of end customers considers that InfiniBand customers typically specify the exact bandwidth they require. (61) Another large OEM mentions that customers wanting to purchase InfiniBand typically expect to be offered the latest generation of this product with the highest bandwidth available. (62)

(79) However, for the purpose of this decision, the question whether high performance fabrics should be further distinguished based on bandwidth can be left open as it does not materially change the Commission's assessment.

(3) Segmentation within Ethernet-based network interconnects

(80) The Commission has assessed a potential segmentation between the various components composing Ethernet-based network interconnects. For each individual component, the Commission has also assessed a potential sub-segmentation based on bandwidth/speed.

(a) Distinction between individual components

(81) As explained above, in previous decisions, the Commission distinguished between the two main components of Fibre Channel SAN networks, i.e. switches and adapters (NICs). As regards switches, the Commission has previously considered a segmentation based on the different protocols and network technologies that they support.

(82) In line with these precedents, the Commission considers that each of the main individual components composing Ethernet-based network interconnects – i.e., NICs, switches, and cables (63) – constitutes a separate product market. In particular, the Commission identifies a separate relevant product market for Ethernet NICs. This conclusion is based on the following elements.

(83) First, as mentioned above, the Notifying Party acknowledges that a distinction between the components making up Ethernet-based network interconnects may be conceivable from a demand-side substitutability perspective.

(84) Second, the results of the Commission’s market investigation confirm that the various components composing Ethernet network interconnect systems fulfil different functions and are not substitutable from the perspective of the customer. For example, a network interconnect supplier explains: “Network interface Cards (NICs) are not a substitute for switches, and are not always sold together either”. (64)

(85) Third, a large majority of end customers having expressed their views explained that they do not express a preference as to whether all components composing the network interconnect systems should be procured from one single supplier as a packaged system. Customers explain that they want to leave competition as open as possible. (65) They would therefore typically not require that OEMs procure full Ethernet systems from one single supplier as long as OEMs guarantee the interoperability of the system. A number of customers also explain that they want to be able to select the best individual components. For example, a customer explains that it evaluates each network component “on its individual merit”. (66)

(86) Fourth, contrary to what is the case for components of high performance fabrics, OEMs also confirm that they source Ethernet network interconnect components both on a standalone basis and as integrated systems. (67)

(87) Fifth, from a supply-side perspective, there are clear limits to the transferability of know-how and technology between Ethernet switches and NICs. In the first place, while Mellanox is a technology leader for Ethernet NICs, which translates into high market shares for Ethernet NICs with a bandwidth speed of 25 Gb/s or higher, it does not hold a similarly strong position in Ethernet switches where its market share is [0-5]%. (68) Conversely, although Cisco is the world’s largest provider of Ethernet-based network switches, based on the Notifying Party’s estimate it only has a market share of around [5-10]% in Ethernet NICs with a bandwidth speed of 25 Gb/s or higher. This is indicative of different market conditions and/or different technology requirements between the two main Ethernet network interconnect components, i.e., NICs and switches.

(88) In the second place, a majority of competitors having expressed their view explain that a supplier of Ethernet NICs with a bandwidth speed below 25 Gb/s would need significant investment and time in order to develop and launch Ethernet NICs of 100 Gb/s with RoCE capability that would be able to compete successfully with Mellanox’s ConnectX-6 100 Gb/s Ethernet NICs. (69) Based on this feedback, the barrier to entry for a supplier only active in Ethernet switches and/or cables but not supplying NICs would be even higher.

(b) Ethernet NICs of 25 Gb/s and higher vs. Ethernet NICs below 25 Gb/s

(89) The term NIC generally refers to a network interface controller, which is a hardware adapter that allows a computer to communicate with a network. In normal datacentre applications, Ethernet NICs are primarily differentiated by the speed at which they can transfer data (bandwidth), which is measured in gigabits per second. Ethernet NICs used in servers support speeds of 1, 10, 25, 50, 100 and now even 200 Gb/s.

(90) As explained above at paragraph 46, in a previous decision, the Commission has already considered a distinction according to bandwidth speed with respect to the SerDes (serializer/deserializer) intellectual property used in high-speed NICs.

(91) In line with this precedent, based on its market investigation, the Commission finds that there is a separate product market for Ethernet NICs with a bandwidth speed of 25 Gb/s or higher which is distinct from the market for Ethernet NICs with a bandwidth speed of less than 25 Gb/s. This conclusion is based on the following elements.

(92) First, from the demand-side perspective, a large majority of end customers and OEMs having expressed their views confirm that there are certain applications, performance needs, or mix of workloads for which customers would only consider Ethernet NICs of at least 25 Gb/s. (70) For example, several customers reported that the need for bandwidth speed of at least 25 Gb/s is particularly acute for hyperscale (71) customers, i.e. mainly cloud service providers. (72)

(93) With respect, specifically, to the possibility of using several 10 Gb/s NICs to achieve the performance of a 25 Gb/s NIC, a customer explained that this would not be an optimal solution because: “using less speed NIC for ex 10 Gbs will triple the amount of NICs wires and will entail use of more complex (port capacity) switches”. (73)

(94) Second, based on their recent procurement activity (last two years), all OEMs having expressed their views explained that when they delivered/installed GPU-accelerated servers connected with Mellanox Ethernet NICs of at least 25 Gb/s, the only other speeds that could also have been suitable were higher speeds. (74) One OEM notably explained that there may be some substitutability from the customer perspective between a given speed level and the level just below. However, this OEM further explained that this is only the case for speeds above 25 Gb/s. According to this OEM, while a customer willing to purchase 50 Gb/s NICs could potentially consider 40 Gb/s NICs as a suitable alternative, a customer willing to purchase 25 Gb/s NICs would not consider NICs with a speed of 10 Gb/s.

(95) Third, competitors also confirm that it is not credible to compete against Mellanox’s Ethernet NICs with a speed of 25 Gb/s or more with Ethernet NICs with a speed of 10 Gb/s. For example, a competitor explains: “If an end-user has requested an Ethernet NIC of 25 Gb/s or above, [this competitor] considers that it would not be possible to credibly compete with an Ethernet NIC of 10 Gb/s against any of the products listed under Questions 22.1 to 22.4. (75) The reason is that the end-user would have an identified need that generally cannot be met by an Ethernet NIC of 10 Gb/s”. (76)

(96) Fourth, Mellanox’s recent internal documents presented to the board [BUSINESS SECRETS – Information redacted regarding business strategy]. (77) This segment corresponds to NICs with a speed of 25 Gb/s or higher. Such a segment is also discussed separately [BUSINESS SECRETS – Information redacted regarding business strategy]. (78)

(97) In addition, the Parties’ internal documents [BUSINESS SECRETS – Information redacted regarding business strategy] describe and discuss what the Parties identified as [BUSINESS SECRETS – Information redacted regarding business strategy]. (79) In particular, NVIDIA noted that this [BUSINESS SECRETS – Information redacted regarding business strategy]. (80)

(98) Fifth, from a supply-side perspective, a majority of competitors consider that there are significant barriers in terms of time and costs for a supplier of Ethernet NICs with a bandwidth speed below 25 Gb/s to develop and launch Ethernet NICs of 100 Gb/s with RoCE capability that would be able to compete successfully with Mellanox’s ConnectX-6 100 Gb/s Ethernet NICs. (81) Intel also explains that whereas there might be supply-side substitution between 25 Gb/s and higher bandwidth, “[t]he 25 Gbps technology is foundational to higher Ethernet speeds, as the 50 Gbps and 100 Gbps Ethernet NICs achieve their speeds through multiple 25 Gbps lanes”. (82)

(c) Other Ethernet components (switches and cables)

(99) Ethernet switches and Ethernet cables could potentially be further segmented based on bandwidth between switches and cables of 25 Gb/s and higher on the one hand and switches and cables below 25 Gb/s on the other.

(100) However, for the purpose of this decision the precise product market definition can be left open, as the Transaction does not raise serious doubts as to its compatibility with the internal market or the functioning of the EEA Agreement with regard to Ethernet switches and Ethernet cables, and any segments therein, under any plausible market definition.

(4) Conclusion

(101) The Commission considers that there are two distinct product markets for (i) high performance fabrics and (ii) Ethernet-based network interconnects.

(102) Within the market for high performance fabrics, the Commission does not consider a further segmentation based on (a) different components meaningful. The question whether high performance fabrics should be further segmented based on (b) protocols or (c) bandwidth/speed can be left open, since any such further segmentation would not materially change the Commission's assessment in this case.

(103) As for the Ethernet-based interconnects, the Commission considers that each of the main individual components composing Ethernet-based network interconnects – i.e., NICs, switches, and cables – constitutes a separate product market. In particular, the Commission identifies a separate relevant product market for Ethernet NICs. The Commission considers that this market should be further segmented into a separate product market for Ethernet NICs with a bandwidth speed of 25 Gb/s or higher, which is distinct from the market for Ethernet NICs with a bandwidth speed of less than 25 Gb/s. Finally, for the purpose of this decision, the precise product market definition for Ethernet switches and Ethernet cables, as well as the question whether these markets should be further segmented, can be left open, as the Transaction does not raise serious doubts as to its compatibility with the internal market under any market that the Commission considers plausible.

4.3.3. Geographic market definition

(104) In previous decisions, the Commission has considered the geographic market for all categories of network interconnect products (including IP/Ethernet switches and routers) to be either EEA-wide or worldwide in scope. (83) In Broadcom/Brocade, (84) respondents to the market investigation unanimously considered that the geographic market for IP/Ethernet switches and routers was worldwide given the global nature of both supply and demand.

(105) The Notifying Party submits that the relevant geographic market for datacentre network interconnects should be defined as worldwide. (85) This should remain the case even for potentially narrower product markets. (86)

(106) The majority of competitors, OEMs and end customers that replied to the market investigation confirmed that network interconnects are supplied on a worldwide basis, irrespective of the location of the component vendor or the location of the end-customer. (87) Moreover, the majority of competitors and the large majority of end customers that expressed a view confirmed that the conditions of competition do not differ depending on the location of the datacentre of the end customer. (88)

(107) In light of the results of the market investigation, for the purposes of this decision, the Commission considers that the geographic scope of the various markets for network interconnect products is worldwide.

4.4. Datacentre servers

4.4.1. Introduction

(108) Datacentres are a collection of servers that are connected by a network and that work together to process/compute workloads. As such, servers are the computing power of datacentres. (89) They typically contain CPUs, network interconnects and optional accelerators together in a system.

(109) NVIDIA offers a family of server systems (DGX-1, DGX-2 and DGX-Station) that perform GPU-accelerated AI and deep learning (“DL”) training and inference applications, among others. The main building block of the DGX servers is HGX, which combines a number of NVIDIA GPUs, connected with NVLINK and NVSwitches, enabling them to function as a single unified accelerator. (90) DGX-1 contains the HGX-1, a board with eight Tesla V100 GPUs, Core Intel Xeon CPUs, and four InfiniBand NICs. DGX-2 contains the HGX-2, which includes 16 NVIDIA Tesla V100 GPUs, dual-socket Intel Xeon CPUs, and eight InfiniBand NICs. (91)

(110) According to the Notifying Party, the DGX family is a “reference architecture” platform for NVIDIA to continue to innovate and demonstrate GPU innovations to server OEMs/ODMs, thereby generating demand for its GPUs. NVIDIA provides that innovation and the building blocks of its DGX servers to OEMs, ODMs and CSPs to use in their own server offerings. In addition, NVIDIA offers DGX servers for sale, but, according to the Notifying Party, these servers are not intended to displace any sales from NVIDIA’s OEM/ODM partners. (92)

4.4.2. Product market definition

4.4.2.1. Commission precedents

(111) In past decisions, the Commission has considered a segmentation of datacentre servers by price band: (a) entry level (below USD 100 000), (b) mid-range (USD 100 000 – USD 999 999), and (c) high-end (USD 1 million and above). The Commission ultimately left the product market definition open. (93)

(112) In Dell/EMC, the Commission noted that the market investigation did not provide a clear result as to a possible segmentation of datacentre servers by operating systems or by the applications they serve. (94)

4.4.2.2. Notifying Party’s views

(113) The Notifying Party considers that the relevant product market should encompass all datacentre servers. (95) However, in this case, the precise product market definition can be left open. (96)

(114) First, according to the Notifying Party, all datacentre servers have the same functions: they are used to process data in the datacentre, which can be achieved with differently priced servers. However, if a further division of the market for datacentre servers were necessary, the Notifying Party submits that a segmentation according to price band would be the most sensible, as it would be in line with the Commission’s precedents. In that situation, the Notifying Party considers that NVIDIA’s DGX servers would belong to the potential market for mid-range servers. (97)

(115) Second, the Notifying Party argues that the market for datacentre servers should not be segmented according to the particular workloads they serve because (i) suppliers do not know which applications end-customers will accelerate with their server and (ii) there is no workload that only a highly accelerated server (such as NVIDIA’s DGX server) could handle. (98)

4.4.2.3. Commission’s assessment

(116) The Commission has considered whether the market for datacentre servers could be further segmented according to price bands or to the applications/end uses for which the datacentre servers are designed or used. During the market investigation, these possible market segmentations were tested.

(117) First, the market investigation regarding a possible segmentation according to price bands provided mixed views. While a number of respondents considered that this segmentation was appropriate, others disagreed with it. For example, a few respondents indicated that such segmentation is “typically used” or is “quite common” and “similar to what IDC provides”. (99) However, Tech Data Europe (OEM) explained that “it is not necessary to define segments as narrowly as high-end, mid-range and low-end servers, as customers switch between servers of different types, and distributors typically sell all types”. (100) Similarly, HPE stated that “[t]here are many different reasons that a server may be prices in a certain way and all servers in a similar price bands are not substitutes for one another”. (101)

(118) Second, the majority of the respondents to the market investigation that expressed a view did not consider that a segmentation of datacentre servers based on the applications/end use they serve is appropriate. (102) For example, an OEM explained that “a segmentation by type of application is not appropriate as servers may be used across multiple applications and customers usually do not request significantly different types of servers based on the use-case.” (103) Similarly, an end customer indicated that “any size [of servers] can serve any applications”. (104) Moreover, some of the respondents that expressed a view listed a number of suppliers offering alternatives to NVIDIA’s DGX servers, including, for example, HPE, IBM, Dell, Intel, Atos and Lenovo. (105) Finally, competitors such as Oracle and IBM confirmed that it would be relatively easy for them to start supplying datacentre servers offering the same level of performance or that would be suitable for the applications/end uses for which DGX servers are used. (106)

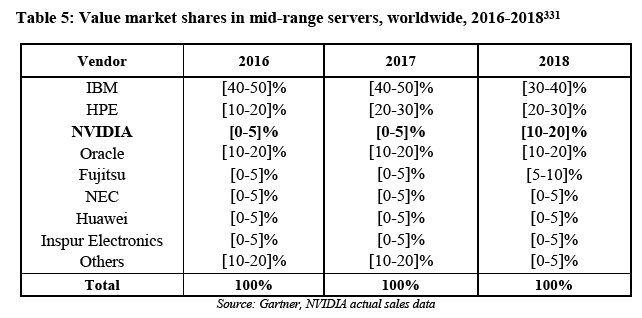

(119) In light of the above, the Commission considers that the market for datacentre servers should not be segmented according to the applications/end uses for which the datacentre servers are designed or used. In addition, the Commission considers that, for the purpose of this decision, it can be left open whether the market for datacentre servers should be further segmented according to price bands, as the Transaction does not raise serious doubts as to its compatibility with the internal market in any plausible product markets, even in a plausible mid-range server market where NVIDIA’s position would be stronger.

4.4.3. Geographic market definition

(120) In past decisions, the Commission found the market for datacentre servers to be at least EEA-wide if not worldwide. (107)

(121) The Notifying Party submits that the relevant geographic market for datacentre servers should be defined as worldwide. (108) This should remain the case even for a potentially narrower product market for mid-range servers. (109)

(122) The results of the market investigation indicate that the geographic scope of the market for datacentre servers is most likely worldwide. (110) A number of OEMs confirmed that they sell datacentre servers worldwide. Moreover, OEM respondents explained that “[t]here are few country-specific reasons which would prevent us from looking at the market global”, “[t]ransport costs are low” and “prices are similar or identical and customers procure servers on an EEA-wide or even worldwide level”. (111) Similarly, end customers confirmed that “clients are international” and that the servers they use are made available worldwide by global suppliers. (112)

(123) In light of the results of the market investigation, for the purposes of this decision, the Commission considers that the geographic scope of the datacentre server markets (including a potential market for mid-range servers) is worldwide.

5. COMPETITIVE ASSESSMENT

5.1. Analytical framework

(124) Under Article 2(2) and (3) of the Merger Regulation and Annex XIV to the EEA Agreement, the Commission declares a proposed concentration incompatible with the internal market and with the functioning of the EEA Agreement if that concentration would significantly impede effective competition in the internal market or in a substantial part of it, in particular through the creation or strengthening of a dominant position.

(125) Under Article 57(1) of the EEA Agreement, the Commission declares a proposed concentration incompatible with the EEA Agreement if that transaction creates or strengthens a dominant position as a result of which effective competition would be significantly impeded within the territory covered by the EEA Agreement or a substantial part of it.

(126) In this respect, a merger may entail horizontal and/or non-horizontal effects. Horizontal effects are those deriving from a concentration where the undertakings concerned are actual or potential competitors of each other in one or more of the relevant markets concerned. Non-horizontal effects are those deriving from a concentration where the undertakings concerned are active in different relevant markets.

(127) The Commission appraises non-horizontal effects in accordance with the guidance set out in the relevant notice, that is to say the Non-Horizontal Merger Guidelines. (113)

(128) As regards non-horizontal mergers, two broad types of such mergers can be distinguished: vertical mergers and conglomerate mergers. (114) Vertical mergers involve companies operating at different levels of the supply chain. (115) Conglomerate mergers are mergers between firms that are in a relationship that is neither horizontal (as competitors in the same relevant market) nor vertical (as suppliers or customers). (116)

(129) In this particular case, the Transaction does not give rise to any horizontal overlaps between the Parties' activities, but results in a vertical and a conglomerate relationship. Accordingly, the Commission will only examine whether the Transaction is likely to give rise to non-horizontal effects. In particular, the Commission will assess potential conglomerate and vertical effects.

5.2. Conglomerate non-coordinated effects

(130) NVIDIA and Mellanox are active in closely related markets. They both supply components used in datacentres or server clusters, in particular those used for HPC. NVIDIA’s discrete datacentre GPUs equip servers that constitute certain (parts of) datacentres (also referred to as GPU-accelerated server clusters) to accelerate a number of applications, typically computations that require massive parallel execution of relatively simple computational tasks. They are necessarily used side-by-side with CPUs, which are always present in datacentre servers. Servers within datacentres are connected to each other through network interconnect solutions, offered among others by Mellanox, and composed of network cables, connecting the NICs within servers to network switches. NVIDIA’s discrete datacentre GPUs and Mellanox’s network interconnect solutions are therefore complementary components, which can be purchased directly or indirectly via OEMs/ODMs by the same set of customers for the same end use (HPC datacentres).

(131) In this decision, the Commission carries out three assessments as regards the conglomerate relationships identified above.

(132) The first assessment consists in determining whether the Transaction would likely confer on the Merged Entity the ability and incentive to leverage Mellanox’s potentially strong market position in both the market for high-performance fabric (with its InfiniBand fabric) and in the market for Ethernet NICs of at least 25 Gb/s into the discrete datacentre GPU market, and whether this would have a significant detrimental effect on competition in the discrete datacentre GPU market, thus causing harm to customers.

(133) The second assessment consists in determining whether the Transaction would likely confer on the Merged Entity the ability and incentive to leverage NVIDIA’s potentially strong market position in the discrete datacentre GPU market into any possible network interconnect markets, and whether this would have a significant detrimental effect on competition in the network interconnect markets, thus causing harm to customers. This assessment is done overall, rather than for each potential network interconnect product market that could potentially be the target of the Merged Entity’s leveraging strategy. This is because most of the competitive assessment is similar irrespective of the exact network interconnect product. The only difference relates to the assessment of the Merged Entity’s incentive. The Commission will consider the various interconnect products when assessing the Merged Entity’s incentive.

(134) The third assessment consists in determining whether the Merged Entity would likely have the ability and incentive to misuse commercially sensitive information that it obtains from competing GPU and network interconnect suppliers (in the context of cooperation with these competitors to ensure interoperability of their respective products) to favour its own position on the relevant discrete datacentre GPU and/or network interconnect markets.

5.2.1. Legal framework

(135) According to the Non-Horizontal Merger Guidelines, in most circumstances, conglomerate mergers do not lead to any competition problems. (117)

(136) However, foreclosure effects may arise when the combination of products in related markets may confer on the merged entity the ability and incentive to leverage a strong market position from one market to another closely related market by means of tying or bundling or other exclusionary practices. (118)

(137) In assessing the likelihood of conglomerate effects, the Commission examines, first, whether the merged firm would have the ability to foreclose its rivals, second, whether it would have the economic incentive to do so and, third, whether a foreclosure strategy would have a significant detrimental effect on competition. In practice, these factors are often examined together as they are closely intertwined. (119)

(138) Mixed bundling refers to situations where the products are also available separately, but the sum of the stand-alone prices is higher than the bundled prices. (120) Tying refers to situations where customers that purchase one good (the tying good) are required also to purchase another good from the producer (the tied good). Tying can take place on a technical or contractual basis. (121) Tying and bundling as such are common practices that often have no anticompetitive consequences. Nevertheless, in certain circumstances, these practices may lead to a reduction in actual or potential rivals’ ability or incentive to compete. Foreclosure may also take more subtle forms, such as the degradation of the quality of the standalone product. (122) This may reduce the competitive pressure on the Merged Entity allowing it to increase prices. (123)

(139) In order to be able to foreclose competitors, the merged entity must have a significant degree of market power, which does not necessarily amount to dominance, in one of the markets concerned. The effects of bundling or tying can only be expected to be substantial when at least one of the merging parties’ products is viewed by many customers as particularly important and there are few relevant alternatives for that product. (124) Further, for foreclosure to be a potential concern, it must be the case that there is a large common pool of customers, which is more likely to be the case when the products are complementary. (125)

(140) The incentive to foreclose rivals through bundling or tying depends on the degree to which this strategy is profitable. (126) Bundling and tying may entail losses or foregone revenues for the merged entity. (127) It may also increase profits by gaining market power in the tied goods market, protecting market power in the tying good market, or a combination of the two. (128)

(141) It is only when a sufficiently large fraction of market output is affected by foreclosure resulting from the concentration that the concentration may significantly impede effective competition. If there remain effective single-product players in either market, competition is unlikely to deteriorate following a conglomerate concentration. (129) The effect on competition needs to be assessed in light of countervailing factors such as the presence of countervailing buyer power or the likelihood that entry would maintain effective competition in the closely related markets concerned. (130)

5.2.2. Affected markets

(142) NVIDIA is active in the discrete datacentre GPU market, while Mellanox is active in the various network interconnect markets (depending on the exact segmentation) which are neighbouring markets closely related to the discrete datacentre GPU markets.

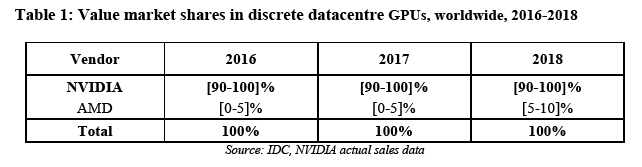

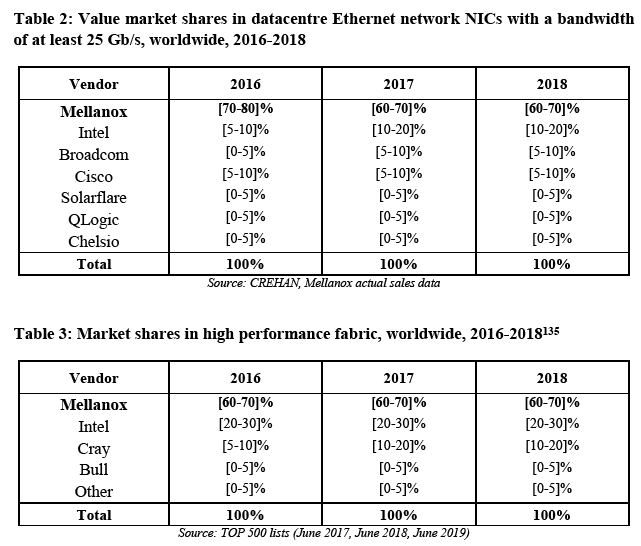

(143) Table 1 below presents NVIDIA’s and its competitors’ market shares on a worldwide market for discrete datacentre GPUs from 2016 to 2018. It follows that NVIDIA has a market share of [90-100]%. Mellanox is active in various network interconnect markets that are neighbouring markets closely related to the discrete datacentre GPU market. As a result, the discrete datacentre GPU market as well as all possible relevant network interconnect markets are affected.

(144) Currently, NVIDIA has only one competitor (AMD) in the discrete datacentre GPU market, which has so far only managed to gain [5-10]% market share in 2018 (see Table 1 above). However, according to the Notifying Party, the discrete datacentre GPU market is characterised by two major recent developments that are radically transforming its competitive dynamics.

(145) The first is the rise of AMD. A long-time participant in the GPU segment for gaming GPUs, AMD is leveraging its gaming Radeon GPU architecture for datacentre GPUs. In November 2018, AMD launched a new discrete datacentre GPU model: Radeon Instinct, which competes directly with NVIDIA’s datacentre GPUs. At the same time, AMD announced an ambitious product roadmap including the next Instinct generation as well as a commitment to on-going launches at a “predictable cadence with generational performance gains.” (131) AMD’s emergence as a strong competitor is evidenced by its recent successes at the top-levels of HPC computing. For instance, AMD announced that the upcoming Frontier datacentre at Oak Ridge National Laboratory will rely on AMD’s Radeon Instinct GPUs (and AMD EPYC CPUs). This will be the fastest datacentre in the world when it comes online in 2021 – and will run scientific, AI, and data analytics workloads. Beyond this success, AMD has also recently announced other top-end datacentre wins, including European datacentres. (132)

(146) The second is the entry of Intel. As explained by Intel, Intel is developing a new GPU to compete with NVIDIA’s GPUs for computational workloads in datacentres. Intel intends to enter in two stages. It plans to release a discrete GPU for graphics rendering workloads on PCs in 2020. Intel expects that this product, which will be the first new entry into the GPU market in nearly two decades, will also have limited deployment in datacentres. Intel plans to follow that with the release in 2021 of a discrete datacentre GPU designed specifically for computational uses in servers (also known as a “GPGPU”, short for general purpose GPU). (133) According to the Notifying Party, Intel’s entry as a credible competitor of NVIDIA is evidenced by its recent win in the tender organised by the U.S. Department of Energy for the upcoming Aurora datacentre at Argonne National Laboratory. This will be one of the fastest datacentres in the world by 2021 when it comes online. The U.S. Department of Energy reportedly selected Intel Xe GPUs (and Intel Xeon CPUs). (134)

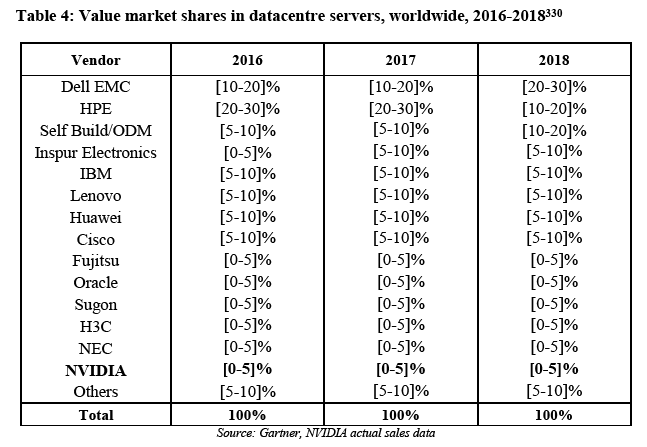

(147) Tables 2 and 3 below present Mellanox’s and its competitors’ market shares in the market for Ethernet network NICs with a bandwidth of at least 25 Gb/s and in the market for high-performance fabric (where Mellanox is active with its InfiniBand fabric).

(148) As can be seen from Tables 2 and 3 above, Mellanox has a market share of [60-70]% in the market for high-performance fabric (with its InfiniBand fabric) and of [60-70]% in the market for Ethernet NICs of at least 25 Gb/s. In all other plausible network interconnect market segments, Mellanox’s market share is low and in any event significantly lower than 30%. (136) As NVIDIA is active in the discrete datacentre GPU market, which is closely related to both the markets for high-performance fabric and for Ethernet NICs of at least 25 Gb/s, it can be concluded that both the discrete datacentre GPU market as well as the markets for high-performance fabric and for Ethernet NICs of at least 25 Gb/s are affected.

5.2.3. Leveraging the position of Mellanox in the markets for high-performance fabric and for Ethernet NICs of at least 25 Gb/s into the discrete datacentre GPU market where NVIDIA is active

5.2.3.1. Potential concern

(149) The Commission has assessed a potential competition concern whereby the Merged Entity would leverage Mellanox’s potentially strong position in the plausible markets for high performance fabric and for Ethernet NICs of at least 25 Gb/s, into the market for discrete datacentre GPUs where NVIDIA is active and thereby foreclose competitors on the discrete datacentre GPUs market, thus causing harm to customers.

(150) The Commission has assessed in particular the ability and the incentive of the Merged Entity to engage in the following tying/bundling practices:

· technical tying: differentiating the degree of technical compatibility and therefore overall performance of the Merged Entity’s joint solution compared to mix-and-match solutions involving only one of its products; and/or

· contractual tying: imposing the purchase of NVIDIA GPUs if the customer wants to purchase Mellanox’s InfiniBand fabric and/or Ethernet NICs of at least 25 Gb/s; and/or

· mixed bundling: incentivising the joint purchase of the Merged Entity’s own products by offering higher prices for mix-and-match solutions involving only one of its products as compared to the bundle.

(151) Both AMD (137) and Intel raised concerns that they may be foreclosed from the discrete datacentre GPU markets due to one or a combination of the three practices described above. In particular, Intel claims that, for demanding HPC and AI deep learning training server deployments, customers require their servers to be connected with a high performance fabric and/or Ethernet NICs of at least 25 Gb/s and that customers do not have credible alternatives to Mellanox. (138)

(152) The Commission has assessed specifically whether the Merged Entity would have the ability and incentive to foreclose enough discrete datacentre GPU market output to hinder Intel’s effective long-term entry and AMD’s expansion into the discrete datacentre GPU market.

(153) The reason why the Commission has assessed the potential leveraging from two distinct markets together is that GPU-accelerated servers, depending on the requirements of the end-customers, are in practice connected with various types of interconnect solutions. In particular, some GPU-accelerated server clusters are connected with high-performance fabrics (including Mellanox’s InfiniBand fabric), while others are connected with Ethernet network interconnect solutions composed among others of NICs of different speeds, including 25 Gb/s and above. NICs are particularly important as far as interoperability between GPUs and the overall network interconnect is concerned, because NICs are the piece of hardware allowing the various servers within a datacentre to communicate with each other. When servers are accelerated with GPUs, NICs may need to interact directly with GPUs.

5.2.3.2. Notifying Party’s view

(154) The Notifying Party submits that the Merged Entity will not have the ability and incentive to leverage Mellanox’s potentially strong market position in any plausible markets into the market for discrete datacentre GPUs where NVIDIA is active. In any event, the Notifying Party submits that any putative leveraging could not lead to anticompetitive foreclosure of NVIDIA’s rivals. The reasons are the following.

(1) As regards ability

(a) As regards Mellanox’s alleged market power

(155) First, the Notifying Party argues that the Merged Entity will not have the ability to anticompetitively leverage Mellanox’s market position post-Transaction, because Mellanox lacks market power in the supply of network interconnect products. Even in the narrow market segments identified in Section 4.3.2. which are limited to (i) high performance interconnect fabrics, and (ii) Ethernet NICs with a data speed of 25 Gb/s or higher, the Notifying Party argues that Mellanox is subject to strong competitive constraints.

– As regards Mellanox’s InfiniBand fabric

(156) In the first place, the Notifying Party argues that Mellanox’s InfiniBand fabric is subject to significant competitive constraints from other high-performance fabrics, such as Intel’s Omni-Path, Cray’s Aries, Gemini and Slingshot, and Fujitsu’s Tofu. In particular, the Notifying Party argues that Cray’s Slingshot fabric will compete strongly with InfiniBand in the foreseeable future. Also, according to the Notifying Party, while Intel has discontinued the next generation of Omni-Path, it is maintaining support for the existing products and Mellanox expects that it will continue to exercise real constraint for at least the next two years.

(157) In the second place, the Notifying Party argues that Mellanox’s InfiniBand fabric is subject to significant competitive constraints from Ethernet. According to the Notifying Party, InfiniBand is no longer protected by any material technical advantages, such as low latency, from Ethernet competition. Therefore, the Notifying Party claims that there is no application for which a particular type of interconnect solution, such as InfiniBand, would be the only option available to customers.

(158) According to the Notifying Party, Mellanox has actually contributed to this trend with the launch of RDMA over converged Ethernet (“RoCE”). RDMA provides direct memory access from the memory of one host to the memory of another host while reducing the burden on the Operating System and CPU. This boosts performance and reduces latency. With RoCE, Mellanox shared this InfiniBand advantage with Ethernet, thus accelerating Ethernet’s uptake. In addition, and on account of customers’ preferences, Mellanox made this technology open-source, allowing all Ethernet suppliers to take advantage of it.

– As regards Mellanox’s Ethernet NICs of at least 25 Gb/s

(159) In the first place, the Notifying Party argues that even on a narrowly defined market for Ethernet NICs with a speed of 25 Gb/s and more, Mellanox faces significant and growing competitive constraints which are not yet fully visible in a backward-looking/static market share analysis. Mellanox was the first producer to launch an Ethernet NIC of 25 Gb/s and above. It started in 2012. Since then, new providers have entered the market, including Intel, Cisco, Broadcom, and Chelsio, taking away market share from Mellanox.

(160) In the second place, the Notifying Party submits that some of Mellanox’s largest historical customers are now building their own Ethernet NICs. This includes […]. These companies used to source Ethernet NICs from Mellanox and others but have since decided to build their own NICs in-house. Others can follow their lead. These “in-house” solutions also exert competitive pressure on Mellanox, which is not reflected at all in market share data.

(161) In the third place, the Notifying Party argues that [BUSINESS SECRETS – Information redacted regarding business strategy].

(b) As regards the Merged Entity’s ability to engage in tying/bundling practices

(162) Second, as regards technical tying, the Notifying Party claims that there are no practicable means through which the Parties could degrade interoperability between Mellanox’s network interconnect and the discrete datacentre GPUs of NVIDIA’s competitors. (139) Moreover, the Notifying Party claims that it is commercially necessary to continue promoting interoperability and compatibility with third parties, in particular with Intel and AMD, because the Parties are dependent on the CPU makers, who have the truly indispensable products that form the backbone of any system, and who could retaliate. (140) The Merged Entity would not have the technical ability to degrade its competitors’ performance because datacentres use open standards and systems that the Parties do not control.

(163) Third, the Notifying Party argues that the procurement structure of this industry precludes the ability to leverage. In the first place, the Notifying Party argues that end-customers are large, sophisticated enterprises that exert considerable countervailing buyer power in a bidding market with credible alternatives. In the second place, the Notifying Party argues that the Parties mainly sell through intermediaries (OEMs and ODMs), limiting the possibility to engage in mixed bundling strategies, given that OEMs could easily buy the “bundle” from the Parties but then sell the components separately to their customers. In the third place, customers often buy processing and network interconnect products in distinct transactions, not synchronously, again limiting the possibility to engage in mixed bundling strategies, as the combined entity would need to make separate offers for the different types of products, in order to conform with the customer’s purchasing practices. (141)

(2) As regards incentives

(164) According to the Notifying Party, the Merged Entity would also not have the incentive to degrade the interoperability of Mellanox’s InfiniBand fabric and/or Ethernet NICs of at least 25 Gb/s with third parties’ discrete datacentre GPUs or to raise Mellanox’s products’ relative price when combined with third party GPUs.

(165) First, the Notifying Party argues in general terms that the Parties have strong commercial incentives to continue interoperating with other datacentre component suppliers, including their rivals. According to the Notifying Party, by fostering compatibility, datacentre component suppliers contribute to growing both the overall market and their addressable share of it. This is particularly so because OEMs have a strong preference for using components that interoperate with other components (including the OEM’s own). The prevalence of standardized interfaces, such as Peripheral Component Interconnect Express (“PCIe”), and protocols, like Ethernet, illustrates the necessity for suppliers to maintain interoperability for their products to be viable. (142)

(166) Second, the Notifying Party argues that the cost of foreclosing suppliers of rival processing solutions in terms of lost interconnect sales would outweigh any benefit from increased GPU sales. However, the only scenario considered by the Notifying Party is a scenario whereby the Merged Entity would refuse altogether to sell Mellanox’s interconnects unless a customer also buys NVIDIA’s GPUs. The Notifying Party claims that this would be unprofitable because many Mellanox customers do not need GPUs. (143)
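The trade-off described in the preceding paragraph can be illustrated with a minimal arithmetic sketch. It is a simplification under stated assumptions, not a reconstruction of any analysis in the case file: the function name `tying_net_profit_change`, the unit volumes and the per-unit margins are all hypothetical.

```python
def tying_net_profit_change(lost_interconnect_units, interconnect_margin,
                            gained_gpu_units, gpu_margin):
    """Net profit effect of a pure tying strategy under simplifying assumptions.

    Cost: interconnect sales lost to customers who do not need GPUs and
    therefore walk away. Benefit: additional GPU sales captured from rivals.
    A negative result means the strategy is unprofitable.
    """
    benefit = gained_gpu_units * gpu_margin
    cost = lost_interconnect_units * interconnect_margin
    return benefit - cost


# Hypothetical figures, purely illustrative (arbitrary currency units).
net_change = tying_net_profit_change(
    lost_interconnect_units=1000,  # assumed interconnect customers with no GPU need
    interconnect_margin=50,        # assumed margin per interconnect unit
    gained_gpu_units=20,           # assumed GPU sales diverted from rivals
    gpu_margin=1000,               # assumed margin per GPU
)
print(net_change)
```

On these assumed figures the lost interconnect margin (50 000) exceeds the gained GPU margin (20 000), so the strategy would be loss-making. The outcome turns entirely on the assumed volumes and margins, and the sketch covers only the outright refusal-to-supply scenario, not technical tying or mixed bundling.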